A few riddles

This site is about probability, information theory, and how they help us understand machine learning.

Check out this page for more about the content, assumed background, and logistics. But I hope you get a better feel for what this is by checking out the following riddles. I hope some of them nerd-snipe you! 😉 You will understand all of them by the end of this mini-course.

What's next?

As we go through the mini-course, we'll revisit each puzzle and understand what's going on. But this text is not just about the riddles. It's about explaining some mathematics that I find cool and important, with a focus on building a solid theoretical background for current machine learning.

There are three parts to this website.

- First (first three chapters), we will start by recalling Bayes' rule. Playing with probabilities will help us understand KL divergence, cross-entropy, and entropy. Those are the secret weapons that solve all our riddles.

- Then (next three chapters), we will see that minimizing KL divergence is a powerful principle for building good probabilistic models. We will use it to explain most of our riddles, but even better, we'll get a pretty good understanding of a super-important part of machine learning: setting up loss functions.

- Finally (final two chapters), we will dig into how entropy is related to coding theory and compression. This can give us pretty useful intuitions about large language models.
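If you want something concrete to hold on to before the first chapter, here is a minimal sketch of the three quantities named above, using their standard textbook definitions for discrete distributions. The function names, the choice of base-2 logarithms (bits), and the two example distributions `p` and `q` are my assumptions for illustration; the chapters may set things up differently.

```python
import math

# Standard definitions for discrete distributions given as lists of probabilities.
# Assumption: logs are base 2, so all results are measured in bits.

def entropy(p):
    """H(p) = -sum_i p_i * log2(p_i), skipping zero-probability outcomes."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i * log2(q_i); assumes q_i > 0 wherever p_i > 0."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """D(p || q) = sum_i p_i * log2(p_i / q_i); assumes q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical example: p is a fair coin, q is a model that believes the coin is biased.
p = [0.5, 0.5]
q = [0.9, 0.1]

print("entropy(p)          =", entropy(p))
print("cross_entropy(p, q) =", cross_entropy(p, q))
print("kl_divergence(p, q) =", kl_divergence(p, q))
```

With these definitions, cross-entropy splits into entropy plus KL divergence, so for a fixed `p`, minimizing cross-entropy over `q` is the same as minimizing KL divergence.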

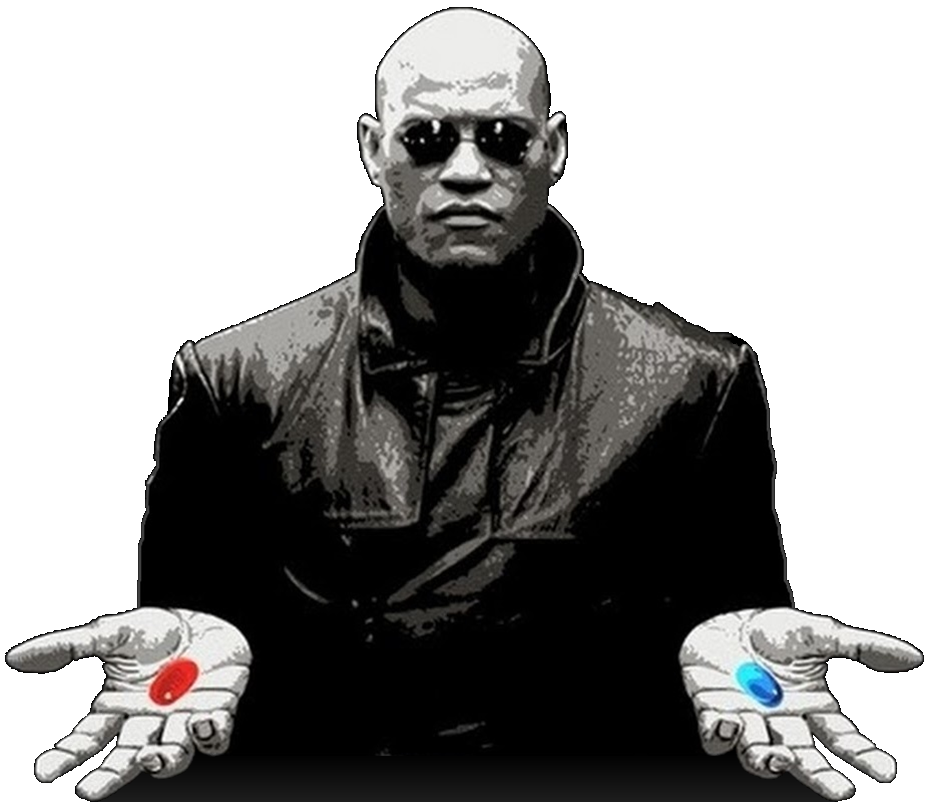

This is your last chance. You can go on with your life and believe whatever you want to believe about KL divergence. Or you can go to the first chapter and see how far the rabbit hole goes.